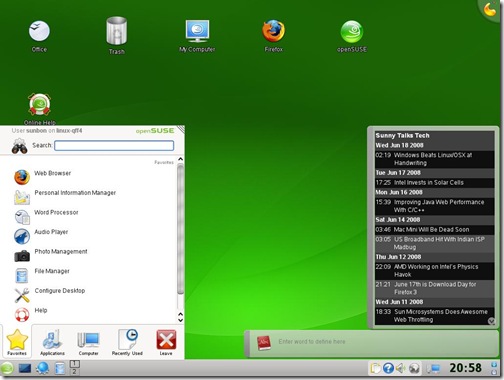

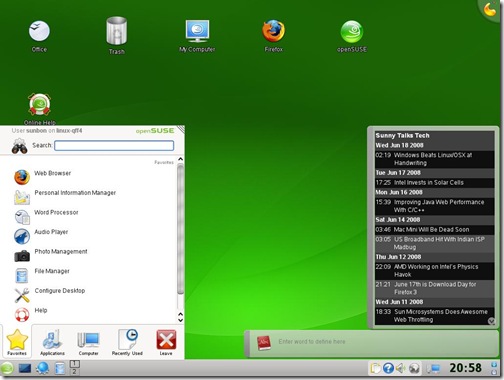

On the 19th of June 2008, openSUSE 11.0 was released, and I was very excited about it because my experience with openSUSE 10.3 had been very good and I had been following the development of 11.0 closely. In the meantime I tried Ubuntu 8.04, Kubuntu, Fedora 9 and OpenSolaris 2008.05, but I kept coming back to openSUSE 10.3 because of one nagging problem or another with the other distros...

Download

I downloaded openSUSE 11.0 the moment it was released, and I have to say that the release was very professionally coordinated. There were launch events all around the globe where people received their openSUSE 11.0 DVDs, and with the countdown running all the time, everyone knew when to get their download managers ready. The mirrors were fast and the torrents seemed to have enough seeders. I finished downloading the 4.3 GB DVD ISO by the morning of the 20th (IST), in about 4 hours. There are also single-CD Live ISOs for KDE and GNOME, as well as a MiniCD (71 MB) for network installation...

Installation: Sleek and Image-based

The openSUSE site has a nice installation guide with screenshots, so it doesn’t make sense for me to go through the same thing again. But two things in openSUSE 11.0 are worth mentioning. The first is the gorgeous installation GUI, the best-looking installer I have seen for any operating system! It’s intuitive and easy to use. The second is the use of image-based deployment for the GNOME installation, which really speeds things up if you are just using the basic GNOME-based setup. I generally prefer KDE, but for the test I installed GNOME and it was fast... really, really fast! I was looking at the GNOME desktop, with all the preferred software installed, in just 15 minutes. That’s faster than any other distro I’ve ever installed.

Like previous versions, openSUSE 11.0 comes with a variety of useful non-open-source software such as Flash Player, Java 1.6.0_u6, fonts, Adobe Reader 8, etc. Along with these I also installed JDK 6 Update 10 (with the awesome new Java Plugin), Mono, NetBeans 6.1 and GlassFish for my OpenMRS performance test... KDE 4.0 is also offered as a separate desktop choice during installation, alongside KDE 3.5.9 and GNOME 2.22. Since I have never been able to run KDE 4.0 stably and have always switched back to KDE 3.5, I thought I’d try KDE 4.0 on openSUSE 11.0.

I was pleasantly surprised that KDE 4.0 “just worked”. I had my first KDE 4.0 crash after 1.5 hours of use, whereas earlier a SIGSEGV, or segmentation fault, would show up within 20 minutes. I still didn’t want any crashes, so I’m back to using KDE 3.5.9. But KDE 4 is really coming along well!

As soon as I finished installing, everyone at home wanted me to record the Euro 2008 matches, so I soon needed VLC. I went to videolan.org/vlc and clicked on the SuSE link... and I was greeted with a 1-Click Install button.

This is one of the really awesome openSUSE features that was first introduced in openSUSE 10.3 and has been improved in openSUSE 11.0. I clicked on it and the installation finished really quickly.

Improved Installation with YaST

That’s when I noticed the most important update in openSUSE 11.0: the improved speed of YaST. No other distro has such an easy administration tool from which nearly everything can be managed, and in openSUSE 11.0 everything in the YaST modules just works. RPM installation is fast and adding community repositories is easy. I am a big fan of apt-get in Ubuntu, but software installation in openSUSE 11.0 is just as easy now...

Every piece of hardware worked

I have lots of hardware, old and new, on which I often install and test different distros, Windows, OSx86, etc. openSUSE worked out of the box with every bit of hardware that was thrown at it. Every other distro struggled with the UMTS 3G card on one laptop, but surprisingly openSUSE 11.0 made it work. A few other distros had trouble with the legacy Nvidia Quadro GoGL card on another laptop, but openSUSE 11.0 worked... Old printers, USB devices, FireWire: everything worked. Even the barcode reader with a PS/2-to-USB converter worked on the USB port, which wouldn’t work on Ubuntu 8.04 or other newer distros.

The only exception was my desktop’s Intel DG965RY board, where surround sound wasn’t working. I followed the Audio Troubleshooting doc, added the model=dell-3stack option, and all my speakers started trumpeting!
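For readers who have not set a sound driver model option before, here is a minimal sketch of what such a line might look like. Only model=dell-3stack comes from my setup above; the module name snd-hda-intel is an assumption (the usual driver for Intel HD Audio boards), and the helper function is purely illustrative. The resulting line would typically go into a modprobe configuration file, whose exact location depends on the distro.

```python
def modprobe_options_line(module: str, **options: str) -> str:
    """Render a single 'options' line in modprobe configuration syntax."""
    rendered = " ".join(f"{key}={value}" for key, value in options.items())
    return f"options {module} {rendered}"


# Assumption: the onboard audio is driven by the snd-hda-intel module.
# Only the model value (dell-3stack) is taken from the article.
line = modprobe_options_line("snd-hda-intel", model="dell-3stack")
print(line)
```

After adding the line, the sound driver has to be reloaded (or the machine rebooted) before the new model setting takes effect.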

Compiz-Fusion and the Bling

Last time, I was not happy with the stability of Compiz-Fusion on openSUSE 10.3. On Ubuntu 8.04, Compiz-Fusion worked well, so I knew the problem had something to do with the new kernel driver module on my system. With openSUSE 11.0, Compiz-Fusion works perfectly and is able to show off all its features. A nice little configuration screen helps manage the number of effects you wish to enable. I personally don’t enable effects, but it’s a good way to show off and make people stand up and appreciate open-source beauty.

Other features and improvements

- Linux kernel 2.6.25

- glibc 2.8

- GCC 4.3

- 200 other new features

Conclusion

You can’t miss the ease of use and the sleek looks that openSUSE 11.0 brings to the desktop. It’s the perfect distro for a new user coming to Linux. For the old pros, openSUSE 11.0 is fast and brings ease of administration and software installation. Novell support is pretty good for big organizations that can buy a boxed product from them. Xen is my favorite for virtualization, and it has good integration and management in YaST. But the strength and momentum of openSUSE are definitely in the desktop space. Earlier, openSUSE lacked the community backing that Ubuntu has generated in a short timespan, but with new initiatives and better responses on the openSUSE forums, the openSUSE community has grown by leaps and bounds. openSUSE has gone from strength to strength, and 11.0 is one of the best ways to give Windows competition on the desktop!

Other screenshots

The GNOME Desktop | The KDE Desktop

Compiz-Fusion Cube Atlantis Plugin | Compiz-Fusion Burn Animation

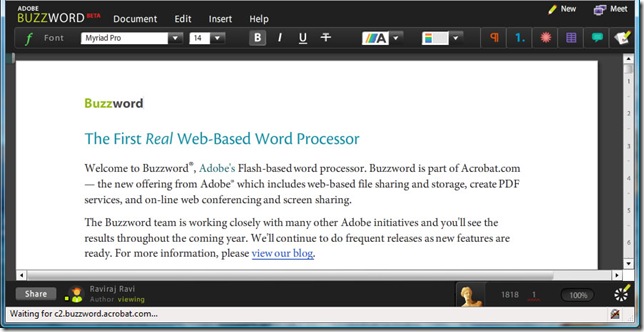

actually means that AMD is going to bring back the good ‘ol TV Tuner + graphics card that ATI used to sell under the All-In-Wonder line of products. The new ATI All-In-Wonder card is a PCI-Express 2.0, has the HD3650 GPU and Theater 650 Pro TV Tuner.
